In a blog post, YouTube says it has removed over 100,000 videos and more than 17,000 channels from April to June for violating its new hate speech rules. This is five times more than YouTube removed in the previous three months. The company also took down over 500 million comments for hate speech.

YouTube attributed this fivefold increase to its recent efforts to counter what it calls “hate content”. In June 2019, the company updated its hate speech policy to include a ban on supremacist content. This change led to the removal of content like Holocaust denial and false flag claims around Sandy Hook Elementary School, but it also inadvertently swept up videos that included historical clips of World War II and documentary coverage of recent news events.

In February, reports that pedophiles were using YouTube comments to list timestamps of child nudity in videos caused advertisers to pull away from the platform. In March, the company had a hard time keeping the video of the mosque shooting in New Zealand off the site. Later, a former Vox reporter called for the LGBTQ community to boycott YouTube after the platform didn’t remove a comedian for saying mean things about him. YouTube also got into hot water with the FTC over ad targeting of minors, and it faced a dozen other major controversies over the last year.

I can list almost monthly issues with YouTube that caused friction between the platform and advertisers or media outlets. I assume YouTube execs are under pressure to reform the platform’s policies to prevent these kinds of problems. That pressure may drive them to use the wrong solutions, just to have some kind of solution to point to at all.

YouTube says it’s been able to remove some objectionable videos even before they are viewed. These efforts have led to an 80% decrease in views on content that is later taken down for violations of YouTube’s rules. YouTube cites improved machine learning systems as part of the reason.

The company said that 87% of the 9 million videos it removed during the second quarter were first flagged by its automated systems. Videos are removed for a variety of reasons beyond hate speech, including copyright infringement, violence, nudity and spam. An AI filtering the content may not be the best solution.

It is unclear how many of the videos removed by the automated system were cases like a history teacher’s upload of Adolf Hitler clips. Lest you think it’s just a few outliers being wrongly removed by an algorithm, Rod Webber, a documentary filmmaker, recently had a mini-documentary on the ‘Straight Pride Parade’ removed. The video was later restored after human review.

In an email, Webber explained that a video on his YouTube channel titled “the Alt right in their own words” was also taken down and later reinstated.

YouTube has also drawn criticism from many in the YouTube community for aggressive automated demonetization. A lawyer named Viva Frei had a video breaking down a lawsuit between PragerU and YouTube demonetized. Basically, covering a lawsuit that accuses YouTube of arbitrary and abusive demonetization got a video arbitrarily demonetized.

I’ve reached out to YouTube and a number of creators for comment. This story will be updated accordingly.

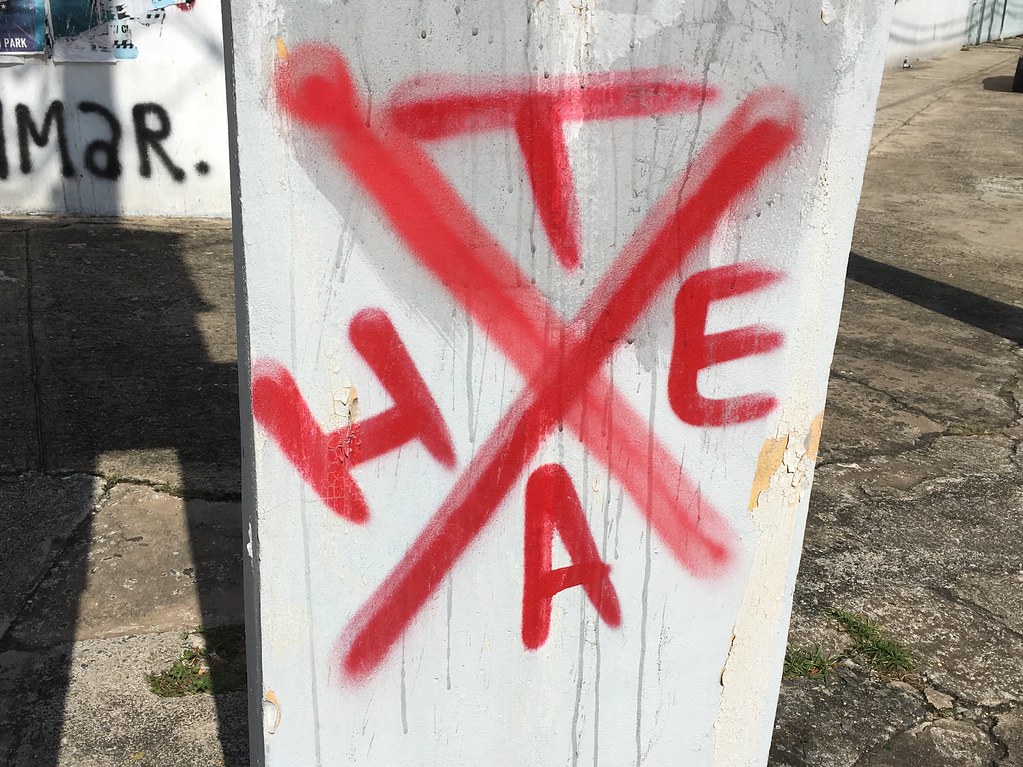

Header Image: “A Good Idea” by cogdogblog

Mason Pelt is a guest author for Internet News Flash. He’s been a staff writer for SiliconANGLE and has written for TechCrunch, VentureBeat, Social Media Today and more.

He’s a Managing Director of Push ROI, and he acted as an informal adviser when building the first Internet News Flash website. Ask him why you shouldn’t work with Spring Free EV.
